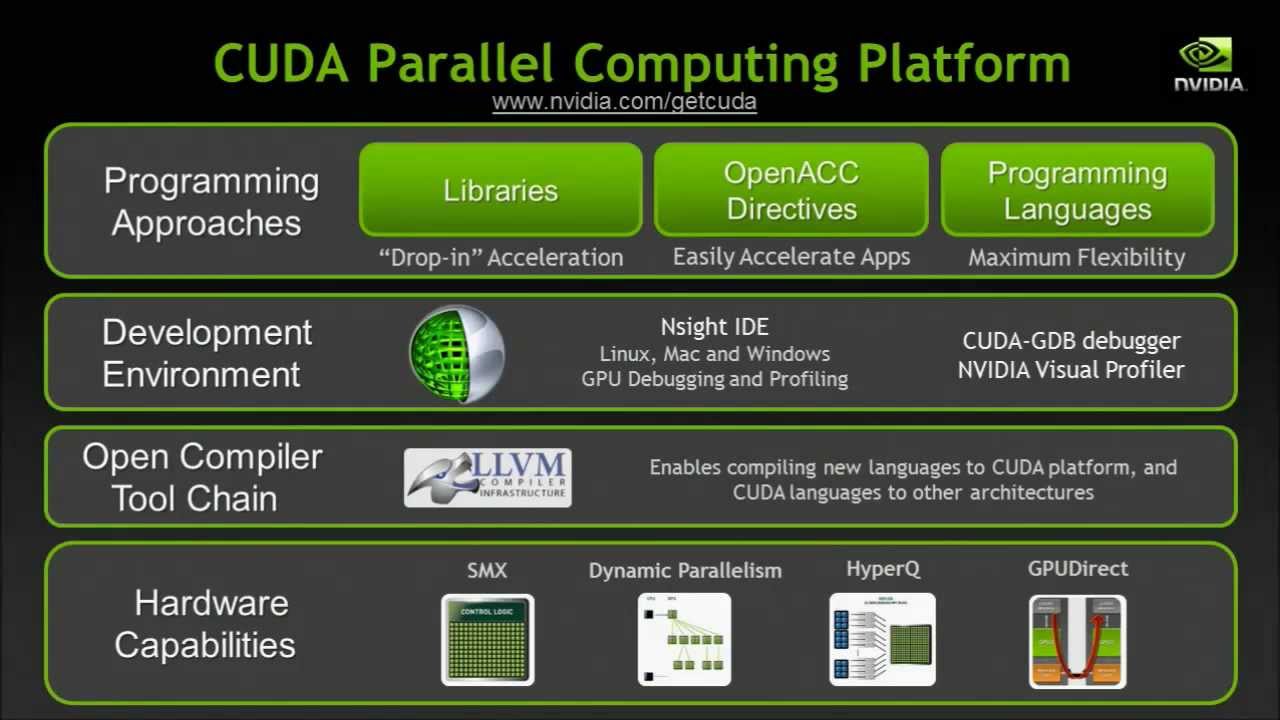

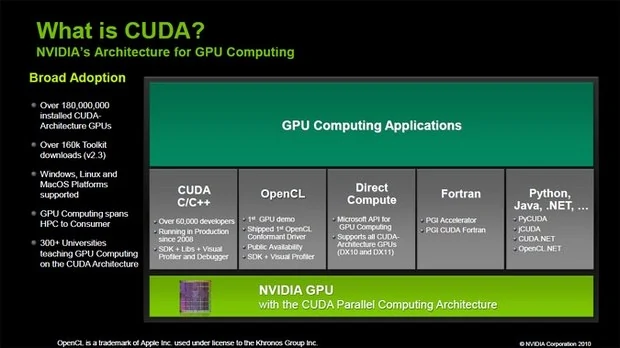

Cuda

import torch

torch.cuda.is_available()

torch.zeros(1).cuda()

torch.__version__

pytrch: Pytorch

nvidia-smi

model.device

More

import streamlit as st

import datetime

st.title("Form for the Users")

st.write("Here, you can answer to some questions in this form.")

user_id = st.text_input("ID", value="Your ID", max_chars=7)

age = st.number_input("Age", min_value=18, max_value=100, step=1)

b_date = st.date_input("Date of Birth", min_value=datetime.date(1921, 1, 1), max_value=datetime.date(2033, 12, 31))

smoke = st.checkbox("Do you smoke?")

genre = st.radio("Which movie genre do you like?", options=['horror', 'adventure', 'romantic'])

weight = st.slider("Choose your weight", min_value=40., max_value=150., step=0.5)

p_form = st.selectbox("Select level of your physical condition", options=["Bad", "Normal", "Good"])

colors = st.multiselect('What are your favorite colors', options=['Green', 'Yellow', 'Red', 'Blue', 'Pink'])

info = st.text_area("Share some information about you", "Put information here", help='You can write about your hobbies or family')

image = st.file_uploader("Upload your photo", type=['jpg', 'png'])

click = st.sidebar.button('Click me!')

if click:

st.sidebar.write("You clicked the button")

col1, col2 = st.columns(2)

with col1:

st.image("https://static.streamlit.io/examples/cat.jpg", width=300)

st.button("Like cats")

with col2:

st.image("https://static.streamlit.io/examples/dog.jpg", width=355)

st.button("Like dogs")

submit = st.button("Submit")

if submit:

st.write("You submitted the form")

from langchain.vectorstores import Chroma

persist_directory = 'vector_store'

vectordb = Chroma.from_documents(

documents=split_docs,

embedding=embedding,

persist_directory=persist_directory

)

To be able to load data from disk when you need it next time, execute the following command.

embedding = OpenAIEmbeddings()

vectordb = Chroma(

persist_directory=persist_directory,

embedding_function=embedding

)

The database initialisation might take a couple of minutes since Chroma needs to load all documents and

get their embeddings using OpenAI API.

We can see that all documents have been loaded.

print(vectordb._collection.count())

12890

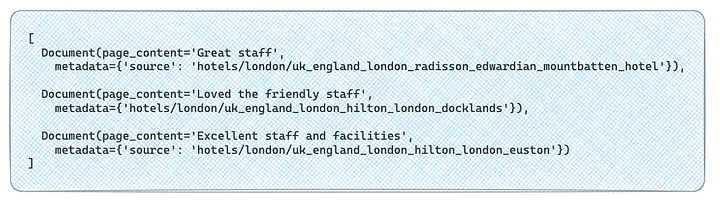

Now, we could use a similarity search to find top customer comments about staff politeness.

query_docs = vectordb.similarity_search('politeness of staff', k=3)

Documents look pretty relevant to the question.

We have stored our customer comments in an accessible way, and it’s time to discuss retrieval in more

detail.

We have stored our customer comments in an accessible way, and it’s time to discuss retrieval in more

detail.

vectordb.similarity_search to retrieve the most related chunks to the

question. In most cases, such an approach will work for you, but there could be some nuances:

- Lack of diversity — The model might return extremely close texts (even duplicates), which won’t add

much new information to LLM.

- Not taking into account metadata — similarity_search doesn’t take into account the metadata

information we have. For example, if I query the top-5 comments for the question “breakfast in

Travelodge Farringdon”, only three comments in the result will have the source equal to

uk_england_london_travelodge_london_farringdon.

- Context size limitation — as usual, we have limited LLM context size and need to fit our documents

into it.

Let’s discuss techniques that could help us to solve these problems.

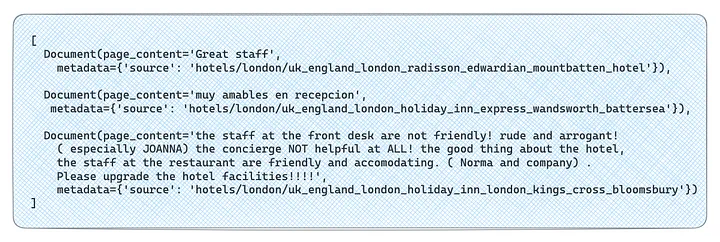

Addressing Diversity — MMR (Maximum Marginal Relevance)

fetch_k the most similar docs to the question using

similarity_search.

Then, we picked up k the most diverse among them.

If we want to use MMR, we should use max_marginal_relevance_search instead of similarity_search and

specify fetch_k number. It’s worth keeping fetch_k relatively small so that you don’t have irrelevant

answers in the output. That’s it.

query_docs = vectordb.max_marginal_relevance_search('politeness of staff',

k = 3, fetch_k = 30)

Let’s look at the examples for the same query. We got more diverse feedback this time. There’s even a

comment with negative sentiment.

query_docs = vectordb.similarity_search('breakfast in Travelodge Farrigdon',

k=5,

filter = {'source': 'hotels/london/uk_england_london_travelodge_london_farringdon'}

)

Now, let’s try to use LLM to come up with such a filter automatically. We need to describe all our

metadata parameters in detail and then use SelfQueryRetriever.

from langchain.llms import OpenAI

from langchain.retrievers.self_query.base import SelfQueryRetriever

from langchain.chains.query_constructor.base import AttributeInfo

metadata_field_info = [

AttributeInfo(

name="source",

description="All sources starts with 'hotels/london/uk_england_london_' \

then goes hotel chain, constant 'london_' and location.",

type="string",

)

]

document_content_description = "Customer reviews for hotels"

llm = OpenAI(temperature=0.1) # low temperature to make model more factual

# by default 'text-davinci-003' is used

retriever = SelfQueryRetriever.from_llm(

llm,

vectordb,

document_content_description,

metadata_field_info,

verbose=True

)

question = "breakfast in Travelodge Farringdon"

docs = retriever.get_relevant_documents(question, k = 5)

Our case is tricky since the source parameter in the metadata consists of multiple fields: country,

city, hotel chain and location. It’s worth splitting such complex parameters into more granular ones in

such situations so that the model can easily understand how to use metadata filters.

However, with a detailed prompt, it worked and returned only documents related to Travelodge Farringdon.

But I must confess, it took me several iterations to achieve this result.

Let’s switch on debug and see how it works. To enter debug mode, you just need to execute the code

below.

import langchain

langchain.debug = True

The complete prompt is pretty long, so let’s look at the main parts of it. Here’s the prompt’s start,

which gives the model an overview of what we expect and the main criteria for the result.

Then, the few-shot prompting technique is used, and the model is provided with two examples of input and

expected output. Here’s one of the examples.

We are not using a chat model like ChatGPT but general LLM (not fine-tuned on instructions). It’s

trained just to predict the following tokens for the text. That’s why we finished our prompt with our

question and the string Structured output: expecting the model to provide the answer.