You can customise the split even further. For example, you could specify length_function = lambda x: len(x.split("\n")) to use the number of paragraphs as the chunk length instead of the number of characters. It’s also quite common to split by tokens because LLMs have limited context sizes based on the number of tokens.

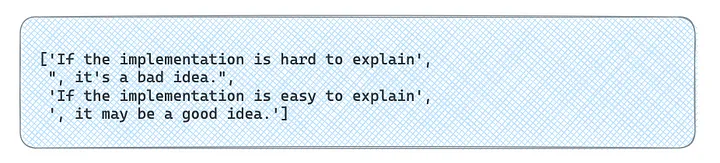

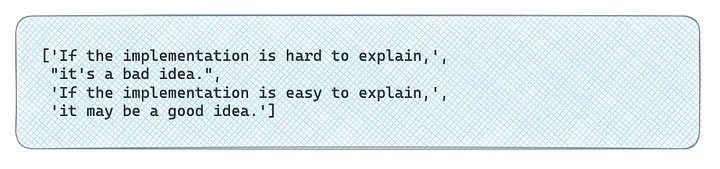

The other potential customisation is to use other separators to prefer to split by "," instead of " " . Let’s try to use it with a couple of sentences.

It works, but commas are not in the right places.

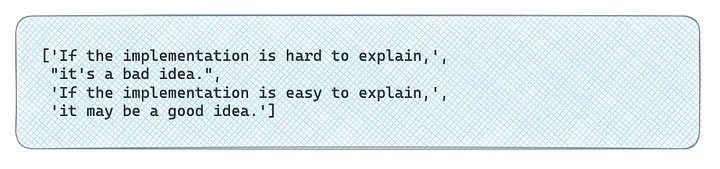

To fix this issue, we could use regexp with lookback as a separator.

text_splitter = RecursiveCharacterTextSplitter(

chunk_size = 50,

chunk_overlap = 0,

length_function = len,

is_separator_regex = True,

separators=["\n\n", "\n", "(?<=\, )", " ", ""]

)

text_splitter.split_text('''\

If the implementation is hard to explain, it's a bad idea.

If the implementation is easy to explain, it may be a good idea.''')

Now it’s fixed.

Also, LangChain provides tools for working with code so that your texts are split based on separators specific to programming languages.

However, in our case, the situation is more straightforward. We know we have individual independent comments delimited by "\n" in each file, and we just need to split by it. Unfortunately, LangChain doesn’t support such a basic use case, so we need to do a bit of hacking to make it work as we want to.

from langchain.text_splitter import CharacterTextSplitter

text_splitter = CharacterTextSplitter(

separator = "\n",

chunk_size = 1,

chunk_overlap = 0,

length_function = lambda x: 1, # hack - usually len is used

is_separator_regex = False

)

split_docs = text_splitter.split_documents(docs)

len(split_docs)

12890

You can find more details on why we need a hack here in my previous article about LangChain.

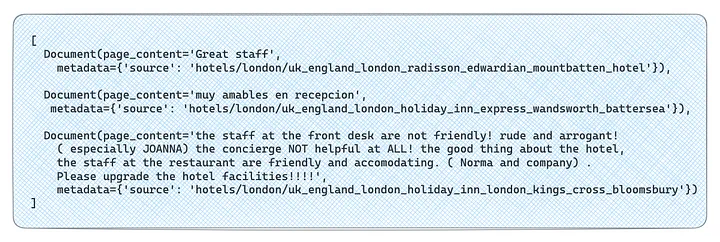

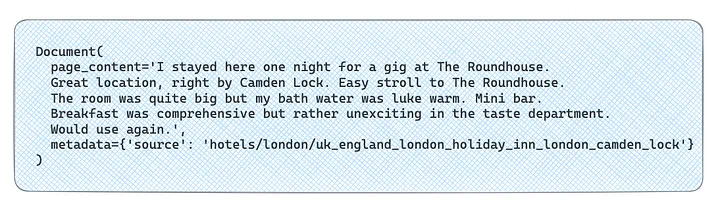

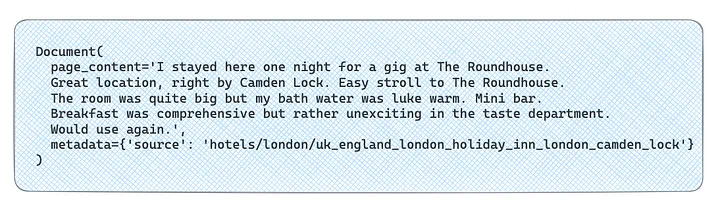

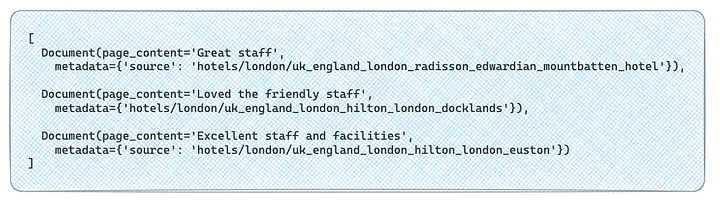

The significant part of the documents is metadata since it can give more context about where this chunk came from. In our case, LangChain automatically populated the source parameter for metadata so that we know which hotel each comment is related to.

There are some other approaches (i.e. for HTML or Markdown) that add titles to metadata while splitting documents. These methods could be quite helpful if you’re working with such data types.

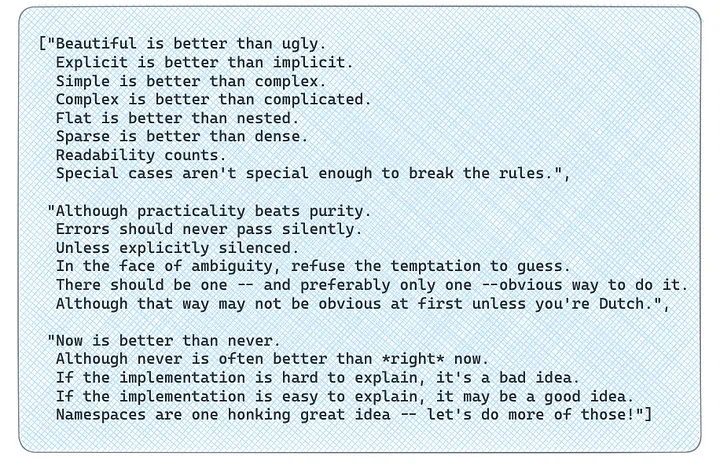

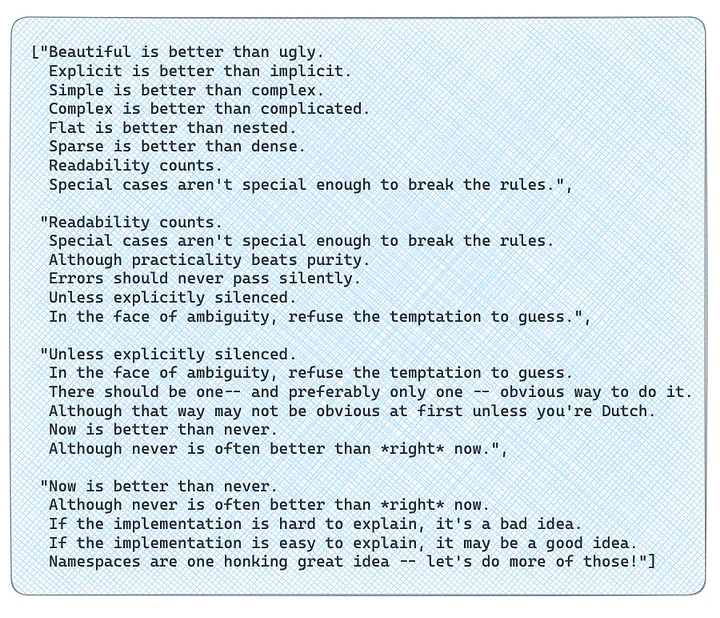

Let’s try to add chunk_overlap.

Let’s try to add chunk_overlap.

Let’s make the chunk size a bit smaller.

Let’s make the chunk size a bit smaller. You can customise the split even further. For example, you could specify length_function = lambda x: len(x.split("\n")) to use the number of paragraphs as the chunk length instead of the number of characters. It’s also quite common to split by tokens because LLMs have limited context sizes based on the number of tokens.

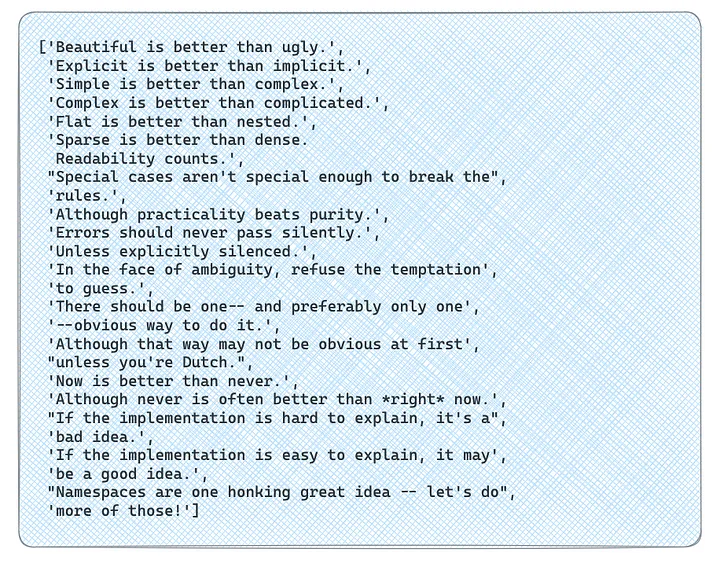

The other potential customisation is to use other separators to prefer to split by "," instead of " " . Let’s try to use it with a couple of sentences.

You can customise the split even further. For example, you could specify length_function = lambda x: len(x.split("\n")) to use the number of paragraphs as the chunk length instead of the number of characters. It’s also quite common to split by tokens because LLMs have limited context sizes based on the number of tokens.

The other potential customisation is to use other separators to prefer to split by "," instead of " " . Let’s try to use it with a couple of sentences.

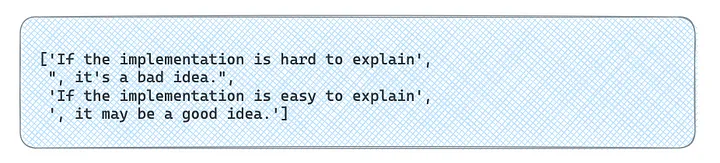

To fix this issue, we could use regexp with lookback as a separator.

To fix this issue, we could use regexp with lookback as a separator.

Also, LangChain provides tools for working with code so that your texts are split based on separators specific to programming languages.

However, in our case, the situation is more straightforward. We know we have individual independent comments delimited by "\n" in each file, and we just need to split by it. Unfortunately, LangChain doesn’t support such a basic use case, so we need to do a bit of hacking to make it work as we want to.

Also, LangChain provides tools for working with code so that your texts are split based on separators specific to programming languages.

However, in our case, the situation is more straightforward. We know we have individual independent comments delimited by "\n" in each file, and we just need to split by it. Unfortunately, LangChain doesn’t support such a basic use case, so we need to do a bit of hacking to make it work as we want to.

There are some other approaches (i.e. for HTML or Markdown) that add titles to metadata while splitting documents. These methods could be quite helpful if you’re working with such data types.

There are some other approaches (i.e. for HTML or Markdown) that add titles to metadata while splitting documents. These methods could be quite helpful if you’re working with such data types.

We have stored our customer comments in an accessible way, and it’s time to discuss retrieval in more detail.

We have stored our customer comments in an accessible way, and it’s time to discuss retrieval in more detail.